
From Nodes to Networks: Graph RAG in Supply Chains – Part 5

Published 5 months ago

Download the full white paper – AI in the Supply Chain

While Retrieval-Augmented Generation (RAG) improves the accuracy and relevance of AI output by connecting it to external knowledge sources, it still treats that knowledge largely as disconnected chunks (pages, paragraphs, or entries) retrieved for context. But supply chains are not flat; they are complex, interrelated systems of entities (suppliers, facilities, products, regulations) linked by dependencies, risks, and transactions.

To reason across this complexity, the next generation of AI systems integrates RAG with a knowledge graph, resulting in what’s now referred to as Graph RAG.

1. What Is Graph RAG?

Graph RAG combines:

RAG’s retrieval and generation capabilities

A knowledge graph, which models entities (e.g., a supplier, a warehouse, a contract clause) and the relationships between them (e.g., supplies, ships to, depends on, governed by)

Instead of retrieving and processing isolated documents, Graph RAG allows AI to:

Traverse structured relationships

Understand multi-hop dependencies (e.g., “Supplier A → Port B → Distribution Center C”)

Infer risks, consequences, or alternatives based on the shape of the supply network

It shifts AI from document-based reasoning to system-based reasoning.
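A minimal sketch of the idea, assuming a tiny in-memory knowledge graph stored as (subject, relation, object) triples: a breadth-first search follows typed edges to recover the multi-hop chain "Supplier A → Port B → Distribution Center C". The entity names and relation labels are illustrative, and a production system would run this kind of traversal inside a graph database rather than in Python dictionaries.

```python
from collections import deque

# Hypothetical triples (subject, relation, object); names are illustrative only.
TRIPLES = [
    ("Supplier A", "ships_to", "Port B"),
    ("Port B", "ships_to", "Distribution Center C"),
    ("Distribution Center C", "ships_to", "Customer X"),
    ("Supplier A", "governed_by", "Contract Clause 7"),
]

def build_graph(triples):
    """Index outgoing edges by subject: subject -> list of (relation, object)."""
    graph = {}
    for subject, relation, obj in triples:
        graph.setdefault(subject, []).append((relation, obj))
    return graph

def find_path(graph, start, goal):
    """Breadth-first search returning the list of hops from start to goal, or None."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, hops = queue.popleft()
        if node == goal:
            return hops
        for relation, nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + [(node, relation, nxt)]))
    return None

graph = build_graph(TRIPLES)
for s, r, o in find_path(graph, "Supplier A", "Distribution Center C"):
    print(f"{s} -[{r}]-> {o}")
```

Returning the hops rather than just the endpoint keeps the answer traceable: each step names the relationship that links one entity to the next.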

2. Why Graph Structures Matter in Supply Chains

Supply chains are inherently graph-like:

A single supplier may support multiple products

A port delay affects many downstream orders

A regulation impacts specific trade lanes and product types

Transportation routes, warehouse transfers, and carrier networks form dynamic, high-dimensional graphs

Reasoning through these interconnections is essential to:

Identifying root causes (e.g., “Why is my lead time increasing?”)

Modeling cascading effects (e.g., “If Port Y is congested, how many SKUs are at risk?”)

Finding optimal alternatives (e.g., “Which alternate routes avoid this constraint?”)

Traditional AI systems, even with RAG, struggle to synthesize these answers because the relevant facts are spread across many linked records. Graph RAG is built to navigate these interconnections naturally.
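The "alternate routes" question can be framed as a shortest-path search that excludes the constrained node. The sketch below uses Dijkstra's algorithm over transit days; the lane network, node names, and day counts are hypothetical, and real routing would also weigh cost, capacity, and service levels.

```python
import heapq

# Hypothetical lane network: node -> {neighbor: transit_days}.
LANES = {
    "Plant": {"Port Y": 2, "Port Z": 3},
    "Port Y": {"DC East": 10},
    "Port Z": {"DC East": 12},
    "DC East": {},
}

def shortest_route(lanes, start, goal, avoid=frozenset()):
    """Dijkstra's algorithm over transit days, skipping any node in `avoid`.

    Returns (total_days, path), or None if no route exists.
    """
    if start in avoid or goal in avoid:
        return None
    frontier = [(0, start, [start])]
    best = {start: 0}
    while frontier:
        days, node, path = heapq.heappop(frontier)
        if node == goal:
            return days, path
        if days > best.get(node, float("inf")):
            continue  # stale queue entry; a shorter route was already found
        for nxt, leg_days in lanes.get(node, {}).items():
            if nxt in avoid:
                continue
            candidate = days + leg_days
            if candidate < best.get(nxt, float("inf")):
                best[nxt] = candidate
                heapq.heappush(frontier, (candidate, nxt, path + [nxt]))
    return None

print(shortest_route(LANES, "Plant", "DC East"))
print(shortest_route(LANES, "Plant", "DC East", avoid={"Port Y"}))
```

With Port Y excluded, the search falls back to the slower route through Port Z instead of failing outright.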

3. Applications of Graph RAG in Supply Chains

Disruption Analysis:

A weather event affects a port. The Graph RAG system automatically identifies all inbound shipments, the suppliers relying on that port, affected customers, and risk-adjusted mitigation options.
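A hedged sketch of the identification step, assuming a directed graph whose edges point from upstream to downstream: downstream reachability from the disrupted port yields shipments and customers, while following edges in reverse yields the suppliers that rely on it. Node names and the naming convention for node types are hypothetical, and generating risk-adjusted mitigation options is out of scope here.

```python
from collections import defaultdict, deque

# Hypothetical edges pointing from upstream to downstream.
EDGES = [
    ("Supplier S1", "Port P"), ("Supplier S2", "Port P"), ("Supplier S3", "Port Q"),
    ("Port P", "Shipment 101"), ("Port P", "Shipment 102"), ("Port Q", "Shipment 201"),
    ("Shipment 101", "Customer C1"), ("Shipment 102", "Customer C2"),
    ("Shipment 201", "Customer C3"),
]

def node_type(node):
    """Hypothetical convention: the first word of a node name is its type."""
    return node.split()[0]

def reachable(adjacency, source):
    """All nodes reachable from source by breadth-first search (source excluded)."""
    seen, queue = {source}, deque([source])
    while queue:
        for nxt in adjacency[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen - {source}

def impact_report(edges, disrupted):
    """Group everything upstream or downstream of the disrupted node by type."""
    downstream, upstream = defaultdict(list), defaultdict(list)
    for a, b in edges:
        downstream[a].append(b)
        upstream[b].append(a)
    affected = reachable(downstream, disrupted) | reachable(upstream, disrupted)
    report = defaultdict(list)
    for node in sorted(affected):
        report[node_type(node)].append(node)
    return dict(report)

print(impact_report(EDGES, "Port P"))
```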

Strategic Sourcing:

By traversing supplier networks, component relationships, and geographic risks, the system recommends resilient sourcing strategies with minimal overlap or risk concentration.
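One simple way to surface risk concentration, sketched under the assumption that the graph records which suppliers are approved for each component and where each supplier is located: flag components that are single-sourced or whose suppliers all sit in one region, and list suppliers shared across components. All names and regions below are hypothetical.

```python
from collections import Counter

# Hypothetical sourcing data: component -> approved suppliers; supplier -> region.
SOURCES = {
    "Battery cell": ["Supplier K", "Supplier L"],
    "Housing": ["Supplier M", "Supplier N"],
    "Controller": ["Supplier K"],
}
REGION = {
    "Supplier K": "Region 1", "Supplier L": "Region 1",
    "Supplier M": "Region 2", "Supplier N": "Region 3",
}

def concentration_flags(sources, region):
    """Flag components that are single-sourced or whose suppliers share one region."""
    flags = {}
    for component, suppliers in sources.items():
        regions = {region[s] for s in suppliers}
        if len(suppliers) == 1:
            flags[component] = "single source"
        elif len(regions) == 1:
            flags[component] = f"all suppliers in {regions.pop()}"
    return flags

def supplier_overlap(sources):
    """Suppliers shared by more than one component, i.e. shared points of failure."""
    counts = Counter(s for suppliers in sources.values() for s in set(suppliers))
    return {s: n for s, n in counts.items() if n > 1}

print(concentration_flags(SOURCES, REGION))
print(supplier_overlap(SOURCES))
```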

Compliance Monitoring:

When a new trade regulation is issued, the system identifies which SKUs, suppliers, and trade lanes are affected, using graph traversal and targeted document retrieval.
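A minimal sketch of the two steps, assuming a new rule that targets goods originating in one country: graph traversal (supplier → component → SKU) finds the affected SKUs, and a targeted retrieval step pulls only the documents that mention the affected entities. The data, the keyword matching, and the rule itself are hypothetical stand-ins, and trade lanes are omitted for brevity.

```python
# Hypothetical graph fragments: supplier location, supplier -> components,
# and component -> SKUs that use it.
SUPPLIER_COUNTRY = {"Supplier A": "Country X", "Supplier B": "Country Y"}
SUPPLIES = {"Supplier A": ["Component 1"], "Supplier B": ["Component 2"]}
USED_IN = {"Component 1": ["SKU-100", "SKU-200"], "Component 2": ["SKU-300"]}

# Hypothetical document store for the targeted-retrieval step.
DOCUMENTS = {
    "doc-1": "Supplier A certificate of origin covering Component 1.",
    "doc-2": "Supplier B audit report for Component 2.",
}

def affected_by_origin_rule(country):
    """Traverse supplier -> component -> SKU for suppliers in the regulated country."""
    suppliers = [s for s, c in SUPPLIER_COUNTRY.items() if c == country]
    components = [comp for s in suppliers for comp in SUPPLIES.get(s, [])]
    skus = sorted({sku for comp in components for sku in USED_IN.get(comp, [])})
    return suppliers, components, skus

def retrieve_evidence(entities):
    """Fetch only the documents that mention an affected entity (naive keyword match)."""
    return sorted(
        doc_id for doc_id, text in DOCUMENTS.items() if any(e in text for e in entities)
    )

suppliers, components, skus = affected_by_origin_rule("Country X")
print(skus)                                        # ['SKU-100', 'SKU-200']
print(retrieve_evidence(suppliers + components))   # ['doc-1']
```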

Inventory Optimization:

Graph RAG helps balance multi-node inventory levels by modeling upstream-downstream interdependencies and lead time fluctuations across the network.
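To illustrate how upstream interdependencies shape lead times, the sketch below computes the longest cumulative lead-time chain feeding a node, assuming an acyclic network and hypothetical lead times in days. Changing a single upstream link shows that a fluctuation propagates to the downstream node only when it lies on the critical chain.

```python
from functools import lru_cache

# Hypothetical upstream links: node -> [(upstream node, lead time in days)].
UPSTREAM = {
    "Store": [("Regional DC", 2)],
    "Regional DC": [("Central DC", 3)],
    "Central DC": [("Plant", 5), ("Importer", 4)],
    "Plant": [("Supplier R", 14)],
    "Importer": [("Supplier T", 30)],
}

def cumulative_lead_time(upstream, node):
    """Longest cumulative lead time over all upstream chains ending at `node`.

    Assumes the network is acyclic; memoization avoids re-walking shared branches.
    """
    @lru_cache(maxsize=None)
    def longest(n):
        return max((days + longest(up) for up, days in upstream.get(n, [])), default=0)

    return longest(node)

print(cumulative_lead_time(UPSTREAM, "Store"))  # 39

# A slip off the critical chain changes nothing; a slip on it propagates.
off_critical = dict(UPSTREAM, Plant=[("Supplier R", 20)])
on_critical = dict(UPSTREAM, Importer=[("Supplier T", 35)])
print(cumulative_lead_time(off_critical, "Store"))  # 39
print(cumulative_lead_time(on_critical, "Store"))   # 44
```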

Carbon Emissions Modeling:

AI agents compute Scope 3 emissions based on transport paths, vendor locations, and material movements, all modeled as a directed graph.
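As an illustration of the arithmetic only, the sketch below applies a simple distance-based estimate to each leg of a directed shipment path: emissions = tonnes × kilometres × emission factor for the transport mode. The emission factors are placeholders rather than official values, and the path, distances, and shipment mass are hypothetical.

```python
# Placeholder emission factors in kg CO2e per tonne-km. These are NOT official
# values; a real model would source factors from a recognised methodology.
EMISSION_FACTOR = {"road": 0.10, "sea": 0.01, "air": 0.60}

# Hypothetical shipment path as directed legs: (origin, destination, mode, km).
PATH = [
    ("Vendor V", "Port B", "road", 200),
    ("Port B", "Port D", "sea", 8000),
    ("Port D", "DC C", "road", 150),
]

def transport_emissions(path, tonnes, factors=EMISSION_FACTOR):
    """Distance-based estimate: tonnes * km * factor, per leg and in total (kg CO2e)."""
    per_leg = {(o, d): tonnes * km * factors[mode] for o, d, mode, km in path}
    return per_leg, sum(per_leg.values())

legs, total = transport_emissions(PATH, tonnes=10)
print(round(total, 1))  # 1150.0
```

Keeping the per-leg breakdown makes it possible to see which edge of the graph dominates the total (here, the sea leg).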

4. Architecture: How Graph RAG Works

Knowledge Graph Construction:

Nodes: Entities such as locations, shipments, contracts, or people

Edges: Relationships such as “ships to,” “depends on,” “complies with”

Data sources: ERP, TMS, WMS, procurement systems, regulatory bodies, supplier portals
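Construction typically starts by mapping records from each source system onto nodes and edges, with entity resolution so that the same supplier spelled differently in two systems becomes a single node. The sketch below assumes hypothetical ERP and TMS extracts and a deliberately naive resolution rule (lowercase, strip punctuation and legal suffixes); real master data work is considerably more involved.

```python
import re

# Hypothetical extracts: the ERP and TMS spell the same supplier differently.
ERP_PURCHASE_ORDERS = [
    {"po": "PO-1", "supplier": "Acme Components, Inc.", "ship_to": "DC North"},
]
TMS_SHIPMENTS = [
    {"shipment": "SH-9", "shipper": "ACME COMPONENTS INC", "po": "PO-1"},
]

LEGAL_SUFFIXES = {"inc", "ltd", "llc", "gmbh", "co"}

def canonical_name(raw):
    """Naive entity resolution: lowercase, drop punctuation and legal suffixes."""
    tokens = re.sub(r"[^\w\s]", " ", raw.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)

def build_triples(purchase_orders, shipments):
    """Map source records onto (subject, relation, object) triples."""
    triples = set()
    for po in purchase_orders:
        triples.add((canonical_name(po["supplier"]), "supplies", po["po"]))
        triples.add((po["po"], "ships_to", po["ship_to"]))
    for sh in shipments:
        triples.add((canonical_name(sh["shipper"]), "ships", sh["shipment"]))
        triples.add((sh["shipment"], "fulfills", sh["po"]))
    return triples

triples = build_triples(ERP_PURCHASE_ORDERS, TMS_SHIPMENTS)
for t in sorted(triples):
    print(t)
```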

Graph-Aware Retrieval:

Instead of searching flat documents, the AI traverses the graph to identify related nodes and fetches only the most relevant facts.
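A sketch of graph-aware retrieval, assuming facts are stored as triples: starting from a seed entity, collect only the facts within a fixed number of hops, with caps on hops and on total facts to keep traversal cost bounded on large graphs. The fact store and parameter values are hypothetical.

```python
from collections import deque

# Hypothetical fact store of (subject, relation, object) triples.
FACTS = [
    ("Port B", "congestion_level", "high"),
    ("Supplier A", "ships_via", "Port B"),
    ("Port B", "serves", "DC C"),
    ("DC C", "replenishes", "Store 12"),
    ("Store 12", "located_in", "City Z"),
    ("Supplier Q", "ships_via", "Port K"),
]

def k_hop_facts(facts, seed, max_hops=2, max_facts=10):
    """Collect facts within `max_hops` of `seed`, following edges in both directions.

    The hop and fact caps keep the traversal bounded on large graphs.
    """
    by_node = {}
    for fact in facts:
        subject, _, obj = fact
        by_node.setdefault(subject, []).append(fact)
        by_node.setdefault(obj, []).append(fact)

    selected, chosen, seen = [], set(), {seed}
    frontier = deque([(seed, 0)])
    while frontier and len(selected) < max_facts:
        node, depth = frontier.popleft()
        if depth == max_hops:
            continue  # expanding this node would reach beyond max_hops
        for fact in by_node.get(node, []):
            if fact in chosen:
                continue
            chosen.add(fact)
            selected.append(fact)
            if len(selected) == max_facts:
                break
            subject, _, obj = fact
            neighbor = obj if subject == node else subject
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return selected

for fact in k_hop_facts(FACTS, "Port B", max_hops=2):
    print(fact)
```

Facts about unrelated entities (here, Supplier Q and Port K) never enter the context, which is the point of retrieving by graph neighborhood instead of by flat document search.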

Context Injection into Generation:

Retrieved graph-structured facts are then passed to the language model, which generates a response that is not just informed, but relationally aware.
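How retrieved facts are passed to the model varies by framework. One common pattern, sketched here as an assumption rather than any specific tool's API, is to render each triple as a numbered line so the model can cite the facts it relied on. The prompt wording is hypothetical and the actual model call is omitted.

```python
def facts_to_context(facts):
    """Render each (subject, relation, object) triple as a numbered line."""
    return "\n".join(
        f"[{i}] {s} --{r}--> {o}" for i, (s, r, o) in enumerate(facts, start=1)
    )

def build_prompt(question, facts):
    """Hypothetical prompt template: facts first, then the question."""
    return (
        "Answer using only the facts below and cite them by number.\n"
        f"Facts:\n{facts_to_context(facts)}\n\n"
        f"Question: {question}\n"
    )

retrieved = [
    ("Supplier A", "ships_via", "Port B"),
    ("Port B", "congestion_level", "high"),
]
print(build_prompt("Is Supplier A exposed to port congestion?", retrieved))
```

Numbered citations also support explainability: an answer can be traced back to the specific relationships that informed it.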

Ongoing Updates:

Graphs are continuously updated through APIs and event streams (e.g., a delayed container updates the edges related to dependent orders and downstream production).
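A minimal sketch of that update path, assuming a delay event arrives as a dictionary with a container ID and a delay in days: the handler shifts the ETA on edges that carry that container, then the start day of production fed by the affected orders. The edge attributes, IDs, and the no-slack assumption are hypothetical simplifications.

```python
from dataclasses import dataclass, field

@dataclass
class Edge:
    source: str
    target: str
    relation: str
    attrs: dict = field(default_factory=dict)

# Hypothetical edges; day numbers are relative planning days.
GRAPH = [
    Edge("CONT-7", "Order 55", "carries", {"eta_day": 10}),
    Edge("Order 55", "Line 3", "feeds", {"start_day": 12}),
    Edge("CONT-8", "Order 56", "carries", {"eta_day": 9}),
]

def apply_delay_event(graph, event):
    """Shift order ETAs for the delayed container, then dependent production starts."""
    container, delay = event["container"], event["delay_days"]
    delayed_orders = set()
    for edge in graph:
        if edge.relation == "carries" and edge.source == container:
            edge.attrs["eta_day"] += delay
            delayed_orders.add(edge.target)
    for edge in graph:
        if edge.relation == "feeds" and edge.source in delayed_orders:
            edge.attrs["start_day"] += delay  # naive: assumes no schedule slack
    return delayed_orders

changed = apply_delay_event(GRAPH, {"container": "CONT-7", "delay_days": 3})
print(changed, GRAPH[0].attrs, GRAPH[1].attrs)
```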

Tools used may include:

Neo4j or Amazon Neptune for graph storage

LangChain, Haystack, or LlamaIndex for RAG orchestration

Vector databases (e.g., Pinecone, Weaviate) for parallel text-based retrieval

5. Key Benefits of Graph RAG

Holistic Insight: Understand system-wide impacts of localized disruptions

Explainability: Trace decisions across linked entities and interactions

Precision: Retrieve the exact information relevant to a network scenario

Scalability: Manage large-scale networks with millions of relationships

Proactivity: Identify risks, chokepoints, or opportunities before they escalate

6. Limitations and Design Considerations

Graph Construction Complexity: Requires a well-governed master data model and consistent entity resolution

System Integration: Must span ERP, WMS, CRM, and external data feeds

Latency and Compute Load: Traversing large graphs in real time can be resource-intensive

Change Management: Stakeholders must trust a system making decisions across dozens of linked domains

Despite these hurdles, Graph RAG offers a substantial leap forward in AI’s ability to navigate the interconnected nature of modern supply chains.

Microsoft is incorporating graph-based models in its Copilot for Dynamics 365, enabling richer context in supply chain planning and customer service.

SAP Business AI has introduced early-stage graph traversal features for production planning and logistics scenario modeling.

Global logistics providers are experimenting with Graph RAG to assess port congestion impacts and reroute traffic across multimodal networks.

Graph RAG represents a convergence of structured reasoning and unstructured understanding, the first real step toward AI systems that don’t just answer questions but operate like experienced supply chain managers, constantly weighing options and interdependencies.

But this intelligence can’t operate in a vacuum. It depends on well-prepared data and unified system infrastructure, which brings us to the topic of data harmonization.

[Download AI in the Supply Chain](https://logisticsviewpoints.com/download-the-ai-in-the-supply-chain-white-paper/)

The post From Nodes to Networks: Graph RAG in Supply Chains – Part 5 appeared first on Logistics Viewpoints.

You may like


Supply Chain and Logistics News February 23rd- 26th 2026

Published February 27, 2026

This week’s supply chain landscape is defined by a massive push to bridge the gap between having data and actually using it. From the high-stakes legal battle over billion-dollar tariffs to a radical AI-driven workforce restructuring at WiseTech Global, the industry is moving past simple visibility toward a period of high-consequence execution. Whether it is the Supreme Court’s intervention in trade policy or the operationalization of decision intelligence showcased at the 30th Annual ARC Forum, the recurring theme is clear: the next competitive advantage belongs to those who can synchronize their technology, their inventory, and their legal strategies in real time. In this edition, we break down the four critical shifts—architectural, legal, operational, and structural—shaping the final days of February 2026.

Your News for the Week:

The Technology Gap: Why Supply Chain Execution Still Isn’t Fully Connected Yet

Richard Stewart of Infios argues that the primary technology gap in modern supply chain execution is not a lack of ambition or budget, but rather an architectural failure. Most existing systems, such as WMS and TMS, are designed to optimize within their own silos, leaving a critical disconnect during real-time disruptions where manual workarounds and spreadsheets are still required to coordinate responses. Citing the Supply Chain Execution Readiness Report, Richard highlights that 69% of leaders struggle with data quality and integration, driving a shift in buying criteria toward interoperability and real-time visibility. Ultimately, Richard suggests that the next competitive advantage will belong to organizations that move beyond simple visibility toward “connected execution,” prioritizing modular architectures that synchronize decisions across the entire operational landscape rather than just reporting on them.

FedEx sues the US Government, seeking a full refund over Trump Tariffs

FedEx has officially filed a lawsuit against the US government, seeking a full refund for duties paid under the Trump administration’s recent tariff policies. The move follows a landmark 6-3 Supreme Court ruling that found the president overstepped his authority by using emergency powers to bypass Congress’s sole power to levy taxes. While the court’s decision stopped the specific enforcement mechanism, it left the status of the estimated $175 billion already collected in limbo. As the first major carrier to seek reimbursement, FedEx could set a precedent for the logistics industry and for thousands of other importers currently navigating a volatile trade environment.

From Hidden Inventory to Returns Recovery: Exposing Operational Blind Spots

Hiu Wai Loh sheds light on the hidden inventory crisis and the costly returns black hole that plagues supply chains long after peak season ends. The research reveals that a staggering number of organizations suffer from fragmented data, leading to false stockouts and millions of dollars trapped in reverse logistics limbo. To overcome these operational blind spots, the author argues that companies must tear down silos and adopt a unified, real-time inventory model. By leveraging AI-driven smart disposition, businesses can efficiently route returns to their most profitable next destination, transforming a traditional cost center into a powerful engine for full-price recovery and year-round agility.

Avantor and Aera Technology presented at the 30th Annual ARC Forum on how they are operationalizing Decision Intelligence (DI). Their session explored how modern supply chains are navigating the paradox of increasing global disruptions alongside record-breaking operational efficiency. Drawing on a case study from Avantor, the presentation demonstrated how DI can move beyond theoretical AI to automate thousands of routine daily decisions, such as stock rebalancing and purchase order prioritization. The key takeaway from the ARC Advisory Group’s 30th Leadership Forum is that companies should focus on “change-ready” solutions that solve immediate, high-impact problems rather than waiting for perfect data or fully autonomous systems.

WiseTech Global Cutting 30% of Workforce in AI Restructure

WiseTech Global, the developer of the CargoWise platform, has announced a major two-year restructuring plan that will involve cutting approximately 2,000 jobs, or 29% of its global workforce. This strategic pivot aims to integrate artificial intelligence deeper into both its internal operations and its customer-facing software, which currently handles a massive 75% of global customs transaction data. The layoffs are expected to hit the company’s U.S. cloud division, E2open, particularly hard, with some reports suggesting cuts of up to 50% there. This move comes at a turbulent time for the Australian tech giant, as it seeks to regain investor confidence following a 68% drop in share price since late 2024 amid leadership controversies and shifting market dynamics.


The post Supply Chain and Logistics News February 23rd- 26th 2026 appeared first on Logistics Viewpoints.


Burger King’s AI “Patty” Moves AI Into Frontline Execution

Published February 26, 2026

Burger King is piloting an AI assistant called “Patty” inside employee headsets as part of its broader BK Assistant platform. This is not a marketing chatbot. It is an operational system embedded into restaurant execution.

Patty supports crew members with preparation guidance, monitors equipment status, and analyzes customer interactions for defined service language such as “please” and “thank you.” Managers can query performance metrics tied to service quality in real time.

The architecture matters more than the novelty.

AI Inside the Operational Core

Patty is integrated with a cloud-based point-of-sale (POS) system. That connection allows:

near real time inventory updates across channels

equipment downtime alerts

synchronized digital menu adjustments

structured service quality measurement

If a product goes out of stock or a machine fails, availability can be updated across kiosks, drive-through boards, and digital systems within minutes.
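The source does not describe how Burger King implements this, so the following is only a generic sketch of the pattern: a stock or equipment event is published once, and every channel subscribed to it updates its own menu. The in-process event bus, channels, items, and equipment-to-item mapping are all hypothetical.

```python
class EventBus:
    """Tiny in-process publish/subscribe bus standing in for a real event system."""

    def __init__(self):
        self.handlers = []

    def subscribe(self, handler):
        self.handlers.append(handler)

    def publish(self, event):
        for handler in self.handlers:
            handler(event)

# Hypothetical menus per channel and equipment-to-item dependencies.
menus = {ch: {"Burger", "Fries", "Shake"} for ch in ("kiosk", "drive_through", "app")}
DEPENDS_ON = {"shake machine": {"Shake"}, "fryer": {"Fries"}}

def items_affected(event):
    """Translate a stock or equipment event into the menu items it removes."""
    if event["type"] == "out_of_stock":
        return {event["item"]}
    if event["type"] == "equipment_down":
        return DEPENDS_ON.get(event["equipment"], set())
    return set()

def channel_handler(channel):
    """Build a handler that removes affected items from one channel's menu."""
    def handle(event):
        menus[channel] -= items_affected(event)
    return handle

bus = EventBus()
for channel in menus:
    bus.subscribe(channel_handler(channel))

bus.publish({"type": "equipment_down", "equipment": "shake machine"})
print(menus)
```

Publishing the event once and letting each channel react is what keeps kiosks, boards, and apps consistent without per-channel manual updates.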

This is AI operating inside the transaction layer, not sitting above it.

Earlier fast food AI experiments focused on automated drive-through ordering. Burger King has been more measured on that front. The more consequential shift is internal execution intelligence.

Efficiency, Visibility, and Risk

Across retail and logistics sectors, AI agents are being embedded directly into workflows to standardize performance and compress response times. The value comes from integration and coordination, not conversational capability.

At the same time, customer sentiment toward fully automated service remains mixed. Privacy, workforce implications, and the risk of over-automation are active concerns. As AI begins monitoring tone and behavior, governance becomes part of the deployment decision.

Operational AI improves visibility. It also expands accountability.

Implications for Supply Chain and Operations Leaders

Three themes emerge:

Execution instrumentation – AI is now measuring soft metrics and converting them into structured operational data.

Closed-loop response – When connected to POS and inventory systems, AI can both detect issues and trigger corrective updates.

Governance at scale – Embedding AI at the edge requires clear oversight, performance auditability, and workforce alignment.

Burger King plans to expand BK Assistant across U.S. restaurants by the end of 2026, with Patty currently piloting in several hundred locations.

This is not a fast food curiosity. It is a signal.

AI is moving from analytics to execution. From dashboards to headsets. From advisory tools to operational participants.

For supply chain leaders, the question is no longer whether AI will enter frontline operations. The question is how intentionally it will be architected and governed once it does.

The post Burger King’s AI “Patty” Moves AI Into Frontline Execution appeared first on Logistics Viewpoints.


AI and Enterprise Software: Is the “SaaSpocalypse” Narrative Overstated?

Published February 26, 2026

Capital is rotating. Growth has given way to value, and within technology the divergence is increasingly pronounced. While broad indices have stabilized, many software names have not. Since late 2025, software equities have materially underperformed other parts of the technology complex. Forward revenue growth across many mid-cap SaaS firms has slowed from prior expansion levels, net retention rates have edged down in several categories, and valuation multiples have compressed accordingly. Markets are repricing both growth durability and margin structure.

The prevailing explanation is straightforward. Generative AI lowers barriers to entry, reduces the cost of building applications, and compresses differentiation. If application logic becomes easier to produce, competitive intensity increases and pricing power weakens. The result is visible not only in equity valuations, but in moderated expansion rates and tighter forward guidance. There is substance behind that concern. But reducing enterprise software economics to code production misses where the structural leverage in these platforms actually resides.

The Core Bear Case

The bearish thesis rests on three related propositions: AI commoditizes application logic, accelerates competitive entry, and pressures margins. If enterprises can generate software dynamically, recurring subscription models face structural pressure. If workflows can be automated through agents, reliance on fixed applications may decline. If code becomes less scarce, incumbents may struggle to defend premium multiples.

The repricing in software reflects these risks. Multiples have compressed meaningfully, and growth expectations have moderated across several verticals. In certain categories, retention softness suggests substitution pressure is already emerging. These signals should not be dismissed as temporary volatility.

At the same time, equating software value solely with feature output or code generation is a simplification. Enterprise software durability rarely rests on feature sets alone.

What Enterprise Software Actually Represents

In supply chain environments, systems function as operational coordination layers rather than isolated applications. Transportation management systems, warehouse platforms, planning suites, and multi-enterprise visibility networks sit at the center of integrated transaction flows. They embed years of configuration, exception handling logic, compliance mappings, and cross-functional workflows. Over time, they accumulate operational data that informs sourcing, forecasting, transportation optimization, and execution decisions across the enterprise.

Replacing those systems is not equivalent to generating new code. It requires rebuilding institutional memory, re-establishing integration points, and re-validating compliance controls across internal and external stakeholders. The switching cost is not interface retraining; it is operational re-architecture.

In our research on AI system design in supply chains, the recurring conclusion is that structural advantage stems from coordination, persistent context, and integration density. Model capability matters, but economic durability flows from how systems connect and govern activity across distributed networks. That distinction is central to evaluating enterprise software in the current environment.

Where Risk Is Real

Not all software categories have equivalent structural protection. Risk is most evident in narrowly defined vertical tools, lightweight workflow utilities, and productivity-layer applications with limited proprietary data accumulation. In these segments, generative models can replicate core functionality with relatively low switching friction. Pricing pressure can intensify quickly, and margin compression may prove structural rather than cyclical.

By contrast, enterprise workflow orchestration platforms deeply embedded in core business processes create operational dependency. Replacing them requires redesigning process architecture, not simply substituting interfaces. Systems that accumulate years of transaction data, customization layers, and ecosystem integrations generate switching costs that extend beyond feature parity. Observability and monitoring platforms that collect continuous telemetry function as operational infrastructure; as AI agents proliferate, the need for measurement, traceability, and governance increases rather than declines.

In supply chain software specifically, planning platforms and transportation orchestration systems accumulate integration density over time. That density represents economic friction against displacement and reinforces durability when market volatility increases.

AI as Architectural Pressure

AI will alter software economics. It will increase development intensity, shorten product cycles, and compress margins in commoditized segments. Vendors operating at the surface layer of functionality will face sustained pressure.

However, AI simultaneously increases coordination complexity. As autonomous agents proliferate, enterprises require more governance controls, more integration layers, and more persistent contextual memory. The economic question shifts from “Who can build features fastest?” to “Who can coordinate distributed intelligence most reliably?”

Agent-to-agent communication, contextual memory frameworks, retrieval-based reasoning, and graph-aware modeling are becoming foundational design considerations in supply chain environments, as described in ARC’s white paper AI in the Supply Chain: Architecting the Future of Logistics. Vendors capable of governing these interactions at scale may strengthen their structural position. Vendors confined to interface-layer differentiation may see pricing pressure intensify. The outcome is not uniform decline; it is structural differentiation within the sector.

Valuation vs. Structural Impairment

Markets reprice sectors quickly when uncertainty rises. The current adjustment reflects legitimate concerns: slower growth trajectories, reduced retention durability, increased competitive intensity, and rising research and development requirements. These are measurable economic factors.

The open question is whether valuations reflect permanent impairment across enterprise software broadly, or whether the market is failing to distinguish between commoditized applications and structurally embedded coordination platforms.

Some observers argue that AI may ultimately expand the addressable market for enterprise systems rather than compress it. As AI adoption increases, enterprises may require additional orchestration frameworks, governance layers, and system-level controls. In that scenario, platforms with embedded workflows and distribution reach could see increased strategic relevance. The impact will vary materially by category and architectural depth.

In supply chain markets, complexity is not declining. Cross-border regulation is tightening, network volatility remains elevated, and multi-enterprise coordination is becoming more demanding. Economic value accrues to platforms that integrate and govern transactions, not to those that merely present information.

Implications for Enterprise Buyers

For supply chain leaders, the relevant issue is not short-term equity performance but architectural positioning. Does the platform function as a system of record embedded in transaction flows, or as a reporting layer adjacent to them? How deeply is it integrated into compliance processes, procurement logic, and transportation execution? Does it accumulate proprietary operational data that reinforces switching costs over time? Is it evolving toward coordinated AI architectures, or layering assistive tools onto a static foundation?

AI will not eliminate enterprise systems. It will expose those whose economic value rests primarily on surface functionality rather than integration depth.

A Measured Conclusion

The current narrative captures real pressure within segments of the software sector, but it does not fully account for structural differentiation. Certain categories face sustained pricing compression where differentiation is shallow and switching friction is low. Others may strengthen as AI increases coordination demands, governance requirements, and integration complexity.

The decisive factor will not be branding or feature velocity. It will be integration density, data gravity, and the ability to coordinate distributed intelligence across enterprise and partner networks. In supply chain contexts, platforms that govern transactions, maintain contextual continuity, and orchestrate multi-node operations retain structural advantage. Platforms that merely automate isolated tasks face a more uncertain economic trajectory.

That distinction, rather than headline narrative, will determine long-term outcomes.

_______________________________________________________________________________

Download the Full Architecture Framework

Agent-to-agent (A2A) communication is only one component of a broader intelligent supply chain architecture. For a structured analysis of how A2A integrates with context-aware systems, retrieval frameworks, graph-based reasoning, and data harmonization requirements, download the full white paper.

The paper outlines the architectural model, governance considerations, and practical implementation path for enterprises building connected intelligence across their supply networks.

Download the white paper to explore the complete framework.

The post AI and Enterprise Software: Is the “SaaSpocalypse” Narrative Overstated? appeared first on Logistics Viewpoints.

Supply Chain and Logistics News February 23rd- 26th 2026

Burger King’s AI “Patty” Moves AI Into Frontline Execution

AI and Enterprise Software: Is the “SaaSpocalypse” Narrative Overstated?

Walmart and the New Supply Chain Reality: AI, Automation, and Resilience

Ex-Asia ocean rates climb on GRIs, despite slowing demand – October 22, 2025 Update

13 Books Logistics And Supply Chain Experts Need To Read

Trending

-

Non classé12 mois ago

Non classé12 mois agoWalmart and the New Supply Chain Reality: AI, Automation, and Resilience

- Non classé4 mois ago

Ex-Asia ocean rates climb on GRIs, despite slowing demand – October 22, 2025 Update

- Non classé7 mois ago

13 Books Logistics And Supply Chain Experts Need To Read

- Non classé1 mois ago

Container Shipping Overcapacity & Rate Outlook 2026

- Non classé4 mois ago

Ocean rates climb – for now – on GRIs despite demand slump; Red Sea return coming soon? – November 11, 2025 Update

- Non classé1 an ago

Unlocking Digital Efficiency in Logistics – Data Standards and Integration

-

Non classé6 mois ago

Non classé6 mois agoBlue Yonder Acquires Optoro to Revolutionize Returns Management

-

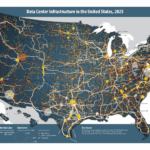

Non classé5 mois ago

Non classé5 mois agoNavigating the Energy Demands of AI: How Data Center Growth Is Transforming Utility Planning and Power Infrastructure